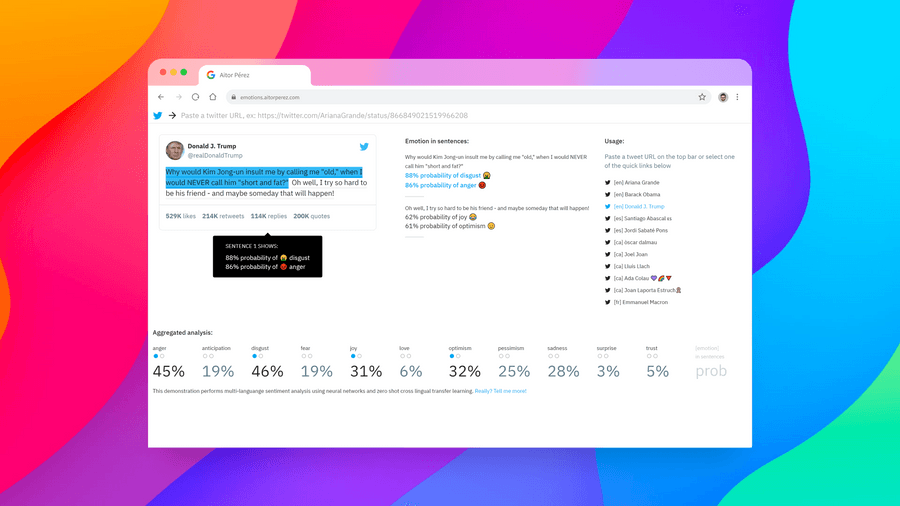

Emotions in tweets

A machine learning exploration of emotions in short texts in any language

Machine Learning

Natural Language Processing (NLP)

Python

Google Cloud Functions

React

For a capstone project in an artificial intelligence course, I wanted to get practical experience with:

- Programming a multilayer perceptron (MLP)

- Using a publicly available dataset for training and testing

- Solving a multi-label classification task

- Putting the AI system to use

After doing some research (mainly into Natural Language Processing, out of personal interest), I discovered two things that caught my attention:

- LASER, an AI system from Facebook that maps sentences to a 1024-dimensional embedding space, with the additional benefit that sentences with similar meaning in any of 93 languages are mapped close together in that space

- A dataset of tweets for sentiment analysis that includes annotations for intensity (regression and ordinal classification for 4 emotions), polarity (regression and ordinal classification), and the presence or absence of emotions (multi-label classification of 11 emotions)
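Because several emotions can be present in the same tweet, each example's 11-emotion annotation can be represented as a vector of 0/1 flags, one per emotion. The sketch below shows that multi-label encoding; the emotion names and the `encode_labels`/`decode_labels` helpers are hypothetical, chosen for illustration rather than taken from the project.

```python
# Hypothetical label set: 11 emotion names assumed for illustration.
EMOTIONS = [
    "anger", "anticipation", "disgust", "fear", "joy", "love",
    "optimism", "pessimism", "sadness", "surprise", "trust",
]


def encode_labels(present: set[str]) -> list[int]:
    """Turn a set of emotion names into an 11-element 0/1 target vector."""
    unknown = present - set(EMOTIONS)
    if unknown:
        raise ValueError(f"unknown emotions: {sorted(unknown)}")
    return [1 if emotion in present else 0 for emotion in EMOTIONS]


def decode_labels(vector: list[int]) -> set[str]:
    """Inverse of encode_labels: keep the names whose flag is set."""
    return {emotion for emotion, flag in zip(EMOTIONS, vector) if flag}


print(encode_labels({"joy", "optimism"}))  # [0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0]
```

One column per emotion also lines up naturally with training a separate binary classifier for each emotion.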

I thought it would be interesting to combine the two into a machine learning system capable of detecting emotions in any language, so I built it. Then I designed a website to interact with it and showcase the prototype.

Product objectives

The application must:

- Explain the process clearly

- Demonstrate the technology

- Let the user have fun with this AI

Features and functions

Possible features that could be designed for the user:

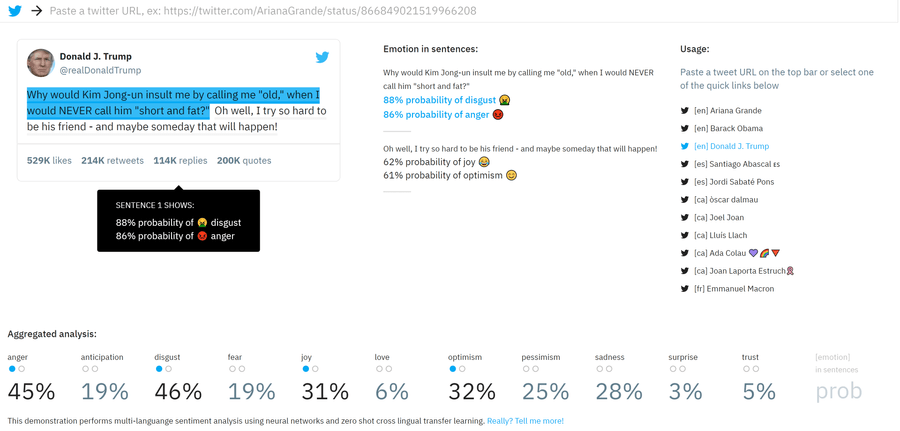

- Input arbitrary short texts and have them analysed by the AI

- Choose a short text from a predefined list of texts

- Retrieve short texts from a third-party service

Given that the machine learning system was trained on a dataset of tweets, and that Twitter provides a public API to access its content, the natural choice was Twitter as the source of content (although any other source would have worked).

A side benefit was that tweets are limited in length, which caps the number of sentences per text. That has a direct impact on the resources needed to run the algorithm, which is currently deployed on the free tier of a cloud computing provider.

Arbitrary text input was discarded for the same length reasons, and to avoid having to secure the service against arbitrary inputs.

Flow

To make getting started as easy as possible, the application fetches a random example as soon as the user lands on the website and runs the algorithm on it without any user intervention. From then on, the user should be able to:

- See the results and inspect the process

- Paste a new tweet URL and try again

- Select another predefined example and try again

- Get to the documentation to learn how it works under the hood
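Since pasting a tweet URL is the only free-form input the application accepts, the server only needs to check that the link looks like a tweet and extract the tweet's ID before asking Twitter for its text. A minimal sketch of that check, assuming links of the form `https://twitter.com/<user>/status/<id>`; the regex and `tweet_id_from_url` are hypothetical, and the actual call to the Twitter API is not shown.

```python
import re

# Assumed URL shape: https://twitter.com/<user>/status/<numeric id>,
# optionally with a www./mobile. prefix, a trailing slash, or a query string.
TWEET_URL = re.compile(
    r"^https?://(?:www\.|mobile\.)?twitter\.com/"
    r"[A-Za-z0-9_]{1,15}/status/(\d+)/?(?:\?.*)?$"
)


def tweet_id_from_url(url: str) -> str:
    """Return the numeric tweet ID, or raise ValueError for anything else."""
    match = TWEET_URL.match(url.strip())
    if match is None:
        raise ValueError(f"not a tweet URL: {url!r}")
    return match.group(1)


print(tweet_id_from_url("https://twitter.com/jack/status/20"))  # 20
```

Rejecting anything that does not match keeps the surface for arbitrary input small, in line with the decision to discard free text.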

Interface elements

A good way to explain how an artificial intelligence system works is to break its process down into small, easy-to-understand steps that can be presented to the user, and then to visualize those steps and their outputs.

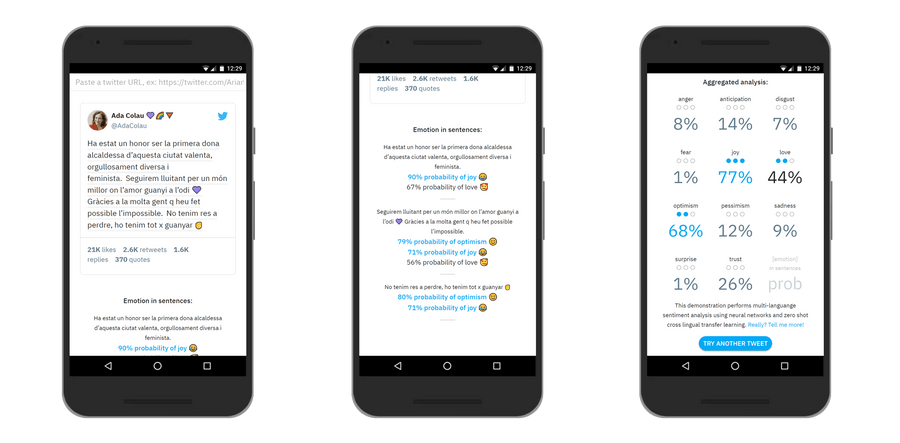

When the user selects a tweet (either by pasting its link or by choosing one of the preloaded examples), its full raw text is retrieved. An element to display the tweet's contents is needed.

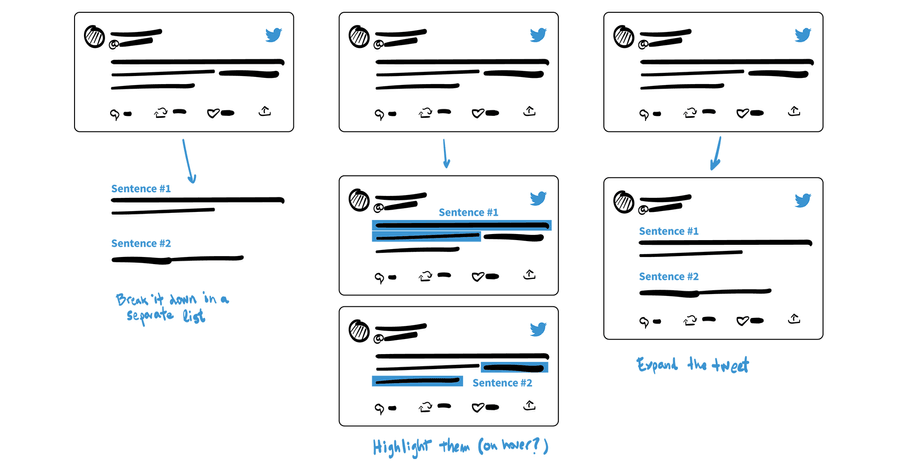

This text is then sent to a server that breaks it down into sentences. A visual representation of the sentences is needed.
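The original does not say how sentences are split. As a rough illustration only, a naive regex splitter that breaks after `.`, `!` or `?` followed by whitespace looks like this; `split_sentences` is a hypothetical helper, not the project's code.

```python
import re

# Split wherever ".", "!" or "?" is followed by whitespace.
# Runs such as "?!" stay attached to their sentence.
_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def split_sentences(text: str) -> list[str]:
    """Naively split text into sentences, dropping empty pieces."""
    parts = _BOUNDARY.split(text.strip())
    return [part.strip() for part in parts if part.strip()]


print(split_sentences("I love this! Do you? Yes."))
# ['I love this!', 'Do you?', 'Yes.']
```

This will wrongly split abbreviations such as "Dr. Smith" and does not handle scripts that do not separate sentences with spaces (e.g. Chinese or Japanese), so a multilingual system would need a proper, language-aware sentence segmenter.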

The server transforms each of these sentences into a vector of 1024 values. These values represent the "meaning" of the sentence independently of the language: "I love you" and "Je t'aime" get almost the same 1024 values. These are the sentence embeddings. This internal step is not visualized, since showing it would confuse the user more than it would help.
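How close two sentence embeddings are is commonly measured with cosine similarity. The sketch below illustrates the idea with synthetic 1024-dimensional vectors standing in for LASER outputs: they are random numbers, not real embeddings of "I love you" or "Je t'aime". Producing real embeddings requires the LASER encoder, which is not shown here.

```python
import numpy as np

EMBEDDING_DIM = 1024  # LASER sentence embeddings have 1024 dimensions


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """1.0 means same direction, around 0.0 means unrelated."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


rng = np.random.default_rng(0)
# Synthetic stand-ins, NOT real LASER outputs.
i_love_you = rng.normal(size=EMBEDDING_DIM)
# A translation is simulated as "almost the same" vector plus a little noise.
je_t_aime = i_love_you + 0.1 * rng.normal(size=EMBEDDING_DIM)
unrelated = rng.normal(size=EMBEDDING_DIM)

print(round(cosine_similarity(i_love_you, je_t_aime), 3))  # close to 1
print(round(cosine_similarity(i_love_you, unrelated), 3))  # close to 0
```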

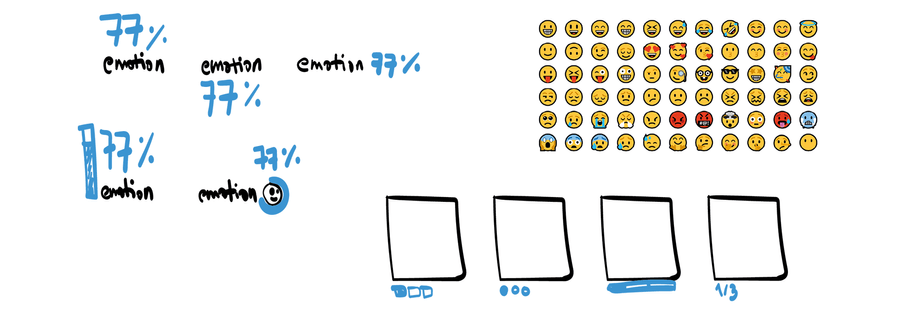

Next, the server feeds these values into 11 neural networks, one trained for each emotion, which output the probability of each of the 11 emotions being present in each sentence. Visual indicators for each individual emotion are needed.

Finally, the emotions detected with a confidence above a certain threshold are reported as the predictions for the tweet. A summary of these findings is needed.
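A minimal NumPy sketch of the inference step, assuming each of the 11 networks is a small MLP with one ReLU hidden layer and a single sigmoid output. The hidden size, activation, and random (untrained) weights are assumptions for illustration; the architecture and framework actually used are not documented here.

```python
import numpy as np

EMBEDDING_DIM = 1024
HIDDEN_UNITS = 64  # assumed; the real layer sizes are not documented
N_EMOTIONS = 11


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def init_mlp(rng: np.random.Generator) -> dict[str, np.ndarray]:
    """Random (untrained) weights for one binary MLP: 1024 -> 64 -> 1."""
    return {
        "W1": rng.normal(scale=1 / np.sqrt(EMBEDDING_DIM), size=(EMBEDDING_DIM, HIDDEN_UNITS)),
        "b1": np.zeros(HIDDEN_UNITS),
        "W2": rng.normal(scale=1 / np.sqrt(HIDDEN_UNITS), size=(HIDDEN_UNITS, 1)),
        "b2": np.zeros(1),
    }


def mlp_forward(params: dict[str, np.ndarray], x: np.ndarray) -> np.ndarray:
    """x: (n_sentences, 1024) -> one emotion's probability per sentence, shape (n_sentences,)."""
    hidden = np.maximum(0.0, x @ params["W1"] + params["b1"])  # ReLU hidden layer
    return sigmoid(hidden @ params["W2"] + params["b2"])[:, 0]


def predict_all(networks: list[dict[str, np.ndarray]], embeddings: np.ndarray) -> np.ndarray:
    """Run every emotion's network; returns (n_sentences, 11) probabilities."""
    return np.stack([mlp_forward(params, embeddings) for params in networks], axis=1)


rng = np.random.default_rng(42)
networks = [init_mlp(rng) for _ in range(N_EMOTIONS)]
embeddings = rng.normal(size=(3, EMBEDDING_DIM))  # 3 synthetic sentences
probs = predict_all(networks, embeddings)
print(probs.shape)  # (3, 11)
```

Because each emotion has its own independent binary network, each probability is computed on its own, so several emotions can be present at once, which is what a multi-label task needs.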
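How per-sentence probabilities are combined into a tweet-level result, and which threshold is used, is not specified. One simple option, shown here purely as an assumption, is to take each emotion's highest probability across the tweet's sentences and keep the emotions above 0.5. The emotion names and `summarize` are hypothetical.

```python
import numpy as np

# Hypothetical label names, in the same order as the network outputs.
EMOTIONS = [
    "anger", "anticipation", "disgust", "fear", "joy", "love",
    "optimism", "pessimism", "sadness", "surprise", "trust",
]
THRESHOLD = 0.5  # assumed value


def summarize(sentence_probs: np.ndarray, threshold: float = THRESHOLD) -> dict[str, float]:
    """sentence_probs: (n_sentences, 11). Keep emotions whose strongest sentence exceeds threshold."""
    # Assumption: a tweet shows an emotion if any one of its sentences does.
    tweet_probs = sentence_probs.max(axis=0)
    return {
        emotion: float(p)
        for emotion, p in zip(EMOTIONS, tweet_probs)
        if p > threshold
    }


probs = np.full((2, len(EMOTIONS)), 0.1)
probs[0, EMOTIONS.index("joy")] = 0.9
probs[1, EMOTIONS.index("optimism")] = 0.7
probs[1, EMOTIONS.index("sadness")] = 0.4
print(summarize(probs))  # {'joy': 0.9, 'optimism': 0.7}
```

Taking the maximum lets a single strongly emotional sentence surface in the summary; averaging across sentences would be a more conservative alternative.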

Visual treatment

The application emulates an embedded tweet and adopts Twitter’s palette to establish a clear visual relationship.